Tamlin Love

Researcher, Lecturer, Developer and Musician


Research

| Towards Explainable Proactive Robot Interactions for Groups of People in Unstructured Environments |

|---|

| Tamlin Love, Antonio Andriella, Guillem Alenyà |

|

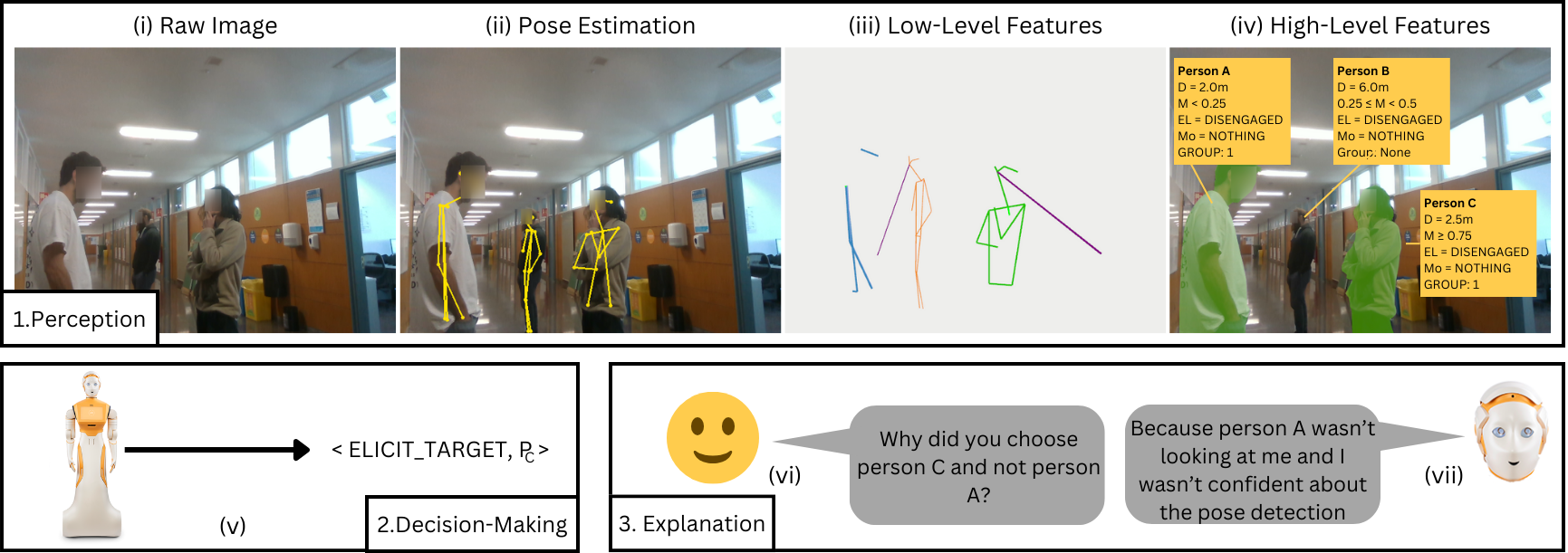

For social robots to operate in unstructured public spaces, they need to gauge complex factors such as human-robot engagement and inter-person social groups, and decide how and with whom to interact. Additionally, such robots should be able to explain their decisions after the fact, to improve accountability and confidence in their behavior. To address this, we present a two-layered proactive system that extracts high-level social features from low-level perceptions and uses these features to make high-level decisions regarding the initiation and maintenance of human-robot interactions. With this system outlined, the primary focus of this work is then a novel method to generate counterfactual explanations in response to a variety of contrastive queries. We provide an early proof of concept to illustrate how these explanations can be generated by leveraging the two-layer system. |

| Late Breaking Report @ HRI 2024 |

| Paper · Code · Video |

| ROSARL: Reward-Only Safe Reinforcement Learning |

|---|

| Geraud Nangue Tasse, Tamlin Love, Mark Nemecek, Steven James, Benjamin Rosman |

|

An important problem in reinforcement learning is designing agents that learn to solve tasks safely in an environment. A common solution is for a human expert to define either a penalty in the reward function or a cost to be minimised when reaching unsafe states. However, this is non-trivial, since too small a penalty may lead to agents that reach unsafe states, while too large a penalty increases the time to convergence. Additionally, the difficulty in designing reward or cost functions can increase with the complexity of the problem. Hence, for a given environment with a given set of unsafe states, we are interested in finding the upper bound of rewards at unsafe states whose optimal policies minimise the probability of reaching those unsafe states, irrespective of task rewards. We refer to this exact upper bound as the Minmax penalty, and show that it can be obtained by taking into account both the controllability and diameter of an environment. We provide a simple practical model-free algorithm for an agent to learn this Minmax penalty while learning the task policy, and demonstrate that using it leads to agents that learn safe policies in high-dimensional continuous control environments. |

| Under review |

| Paper · Code |

| Facilitating Safe Sim-to-Real through Simulator Abstraction and Zero-shot Task Composition |

|---|

| Tamlin Love, Devon Jarvis, Geraud Nangue Tasse, Branden Ingram, Steven James, Benjamin Rosman |

|

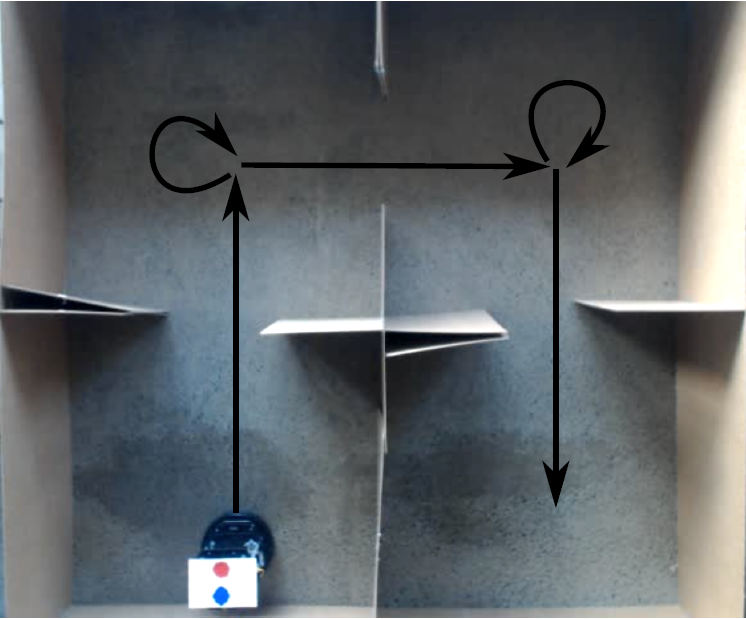

Simulators are a fundamental part of training robots to solve complex control and navigation tasks. This is due to the speed and safety they offer in comparison to training directly on a physical system, where exploration may drive the system towards actions that are dangerous to itself and its environment. However, simulators have a fundamental drawback known as the “reality gap”, which describes the discrepancy in performance that occurs when a robot trained in simulation performs the same task in the real world. The reality gap is prohibitive, as it means many of the most powerful recent advances in reinforcement learning (RL) cannot be used with robots: their high sample complexity makes physical training infeasible. In this work we introduce a framework for applying high sample complexity RL algorithms to robots by leveraging recent advances in hierarchical RL and skill composition. We demonstrate that adapting hierarchical RL techniques allows us to close the reality gap at multiple levels of abstraction. As a result, we are able to train a robot to perform combinatorially many tasks within a domain with minimal training on a physical system or steps of error correction. We believe this work provides an important starting framework for applying hierarchical RL to perform sim-to-real generalisation at multiple levels of abstraction. |

| Workshop on Lifelong Learning of High-level Cognitive and Reasoning Skills @ IROS, 2022 |

| Paper · Video · Code |

| Who should I trust? Cautiously learning with unreliable experts |

|---|

| Tamlin Love, Ritesh Ajoodha and Benjamin Rosman |

|

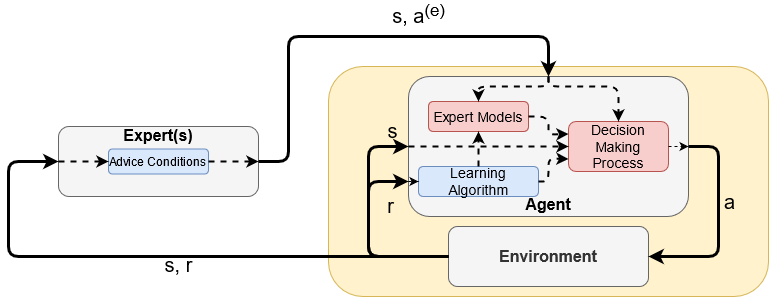

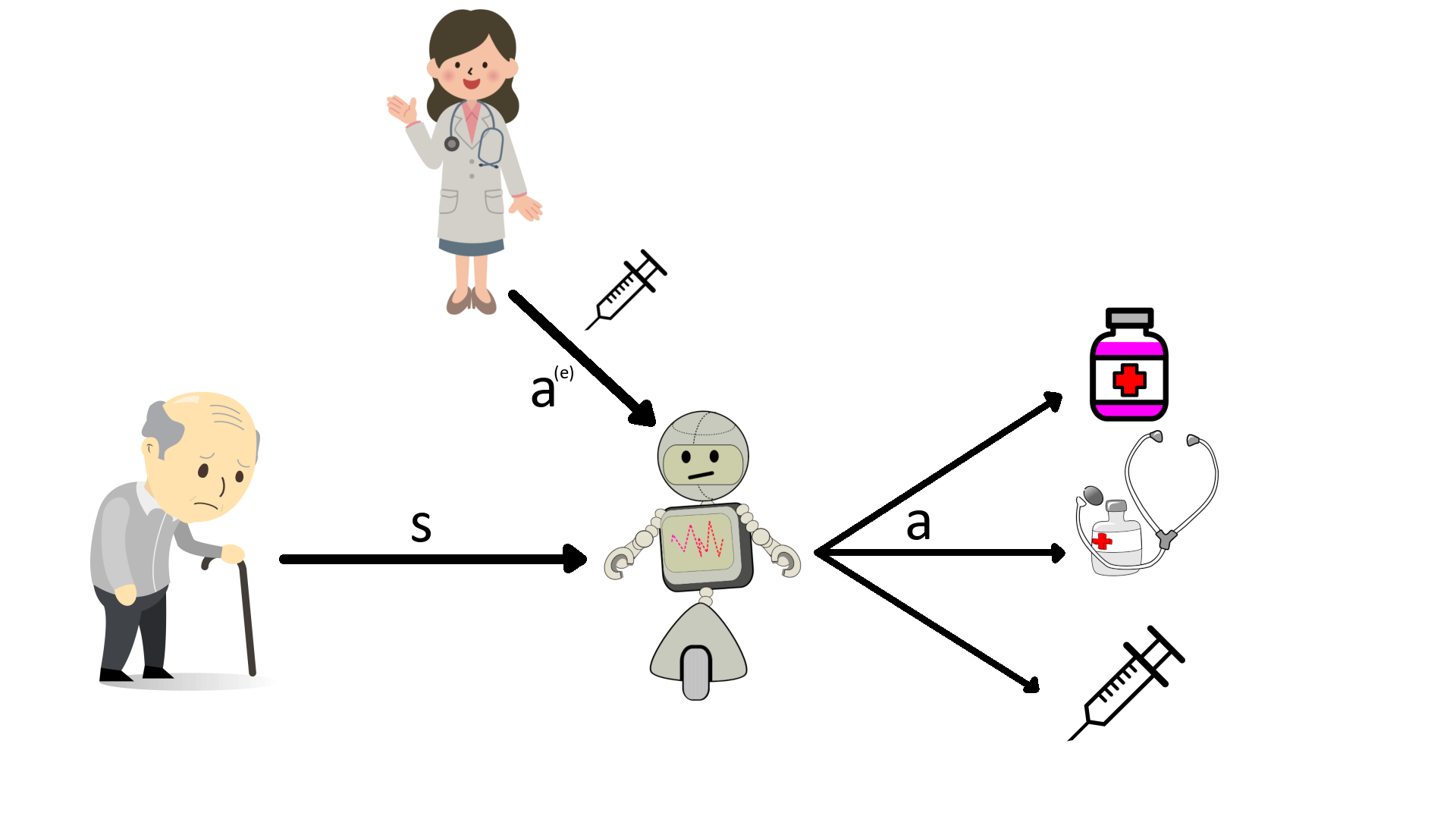

An important problem in reinforcement learning is the need for greater sample efficiency. One approach to dealing with this problem is to incorporate external information elicited from a domain expert in the learning process. Indeed, it has been shown that incorporating expert advice in the learning process can improve the rate at which an agent’s policy converges. However, these approaches typically assume a single, infallible expert; learning from multiple and/or unreliable experts is considered an open problem in assisted reinforcement learning. We present CLUE (cautiously learning with unreliable experts), a framework for learning single-stage decision problems with action advice from multiple, potentially unreliable experts. CLUE augments an unassisted learner with a model of expert reliability and a Bayesian method of pooling advice to select actions during exploration. Our results show that CLUE maintains the benefits of traditional approaches when advised by reliable experts, but is robust to the presence of unreliable experts. When learning with multiple experts, CLUE is able to rank experts by their reliability and to differentiate between them on that basis. |

| Neural Computing and Applications, 2022 |

| Paper · Supplementary Material · Code |
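As a rough illustration of the idea described in the CLUE abstract above, the sketch below tracks each expert's reliability with a Beta distribution and pools advice with a naive-Bayes style product. The names `ExpertReliability` and `pool_advice`, the Beta model, and the form of the likelihood are all assumptions made for illustration; they are not taken from the paper or its released code.

```python
class ExpertReliability:
    """Hypothetical Beta(alpha, beta) belief over how often an expert's advice is good."""

    def __init__(self, alpha=1.0, beta=1.0):
        self.alpha = alpha
        self.beta = beta

    def mean(self):
        # Expected reliability under the Beta belief.
        return self.alpha / (self.alpha + self.beta)

    def update(self, advice_was_good):
        # Count one more good or bad piece of advice.
        if advice_was_good:
            self.alpha += 1.0
        else:
            self.beta += 1.0


def pool_advice(advice, reliabilities, n_actions):
    """Return a posterior over which action is best.

    advice: dict mapping expert name -> advised action index.
    reliabilities: dict mapping expert name -> ExpertReliability.
    Assumes n_actions >= 2 and a uniform prior over actions.
    """
    posterior = [1.0 / n_actions] * n_actions
    for expert, advised in advice.items():
        rho = reliabilities[expert].mean()
        for a in range(n_actions):
            # Assumed likelihood: the expert names the best action with
            # probability rho, otherwise picks uniformly among the rest.
            likelihood = rho if a == advised else (1.0 - rho) / (n_actions - 1)
            posterior[a] *= likelihood
    total = sum(posterior)
    return [p / total for p in posterior]
```

Under these assumptions, an expert whose estimated reliability sits near chance barely moves the posterior, which is one simple way an agent could stay robust to unreliable advice.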

| Learning Who to Trust: Policy Learning in Single-Stage Decision Problems with Unreliable Expert Advice |

|---|

| Tamlin Love, Ritesh Ajoodha and Benjamin Rosman |

|

Work in the field of Assisted Reinforcement Learning (ARL) has shown that the incorporation of external information in problem solving can greatly increase the rate at which learners can converge to an optimal policy and aid in scaling algorithms to larger, more complex problems. However, these approaches rely on a single, reliable source of information; the problem of learning with information from multiple and/or unreliable sources is still an open question in ARL. We present CLUE (Cautiously Learning with Unreliable Experts), a framework for learning single-stage decision problems with policy advice from multiple, potentially unreliable experts. We compare CLUE against an unassisted agent and an agent that naïvely follows advice, and our results show that CLUE exhibits faster convergence than an unassisted agent when advised by reliable experts, but is nevertheless robust against incorrect advice from unreliable experts. |

| 2022, MSc Thesis |

| Thesis · Code |

| Work published in Neural Computing and Applications 2022 |

| Work featured in Multi-disciplinary Conference on Reinforcement Learning and Decision Making 2022 (Extended Abstract · Poster) |

| Work featured in Workshop on Human-aligned Reinforcement Learning for Autonomous Agents and Robots 2021 (Paper) |

| Building Undirected Influence Ontologies Using Pairwise Similarity Functions |

|---|

| Tamlin Love and Ritesh Ajoodha |

|

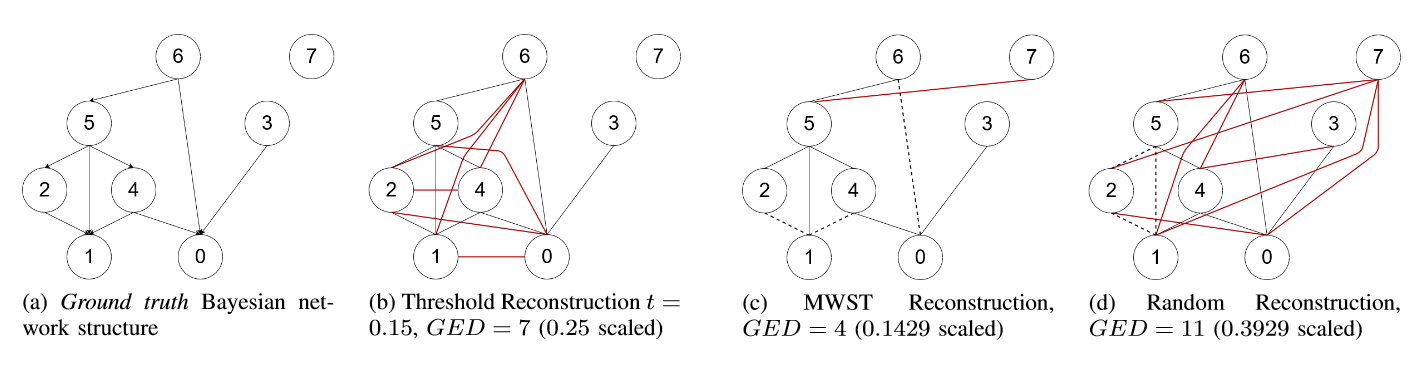

The recovery of influence ontology structures is a useful tool within knowledge discovery, allowing for an easy and intuitive method of graphically representing the influences between concepts or variables within a system. The focus of this research is to develop a method by which undirected influence structures, here in the form of undirected Bayesian network skeletons, can be recovered from observations by means of some pairwise similarity function, either a statistical measure of correlation or some problem-specific measure. In this research, we present two algorithms to construct undirected influence structures from observations. The first makes use of a threshold value to filter out relations denoting weak influence, and the second constructs a maximum weighted spanning tree over the complete set of relations. In addition, we present a modification to the minimum graph edit distance (GED), which we refer to as the modified scaled GED, in order to evaluate the performance of these algorithms in reconstructing known structures. We perform a number of experiments in reconstructing known Bayesian network structures, including a real-world medical network. Our analysis shows that these algorithms outperform a random reconstruction (modified scaled GED ≈ 0.5), and can regularly achieve modified scaled GED scores better than 0.3 in sparse cases and 0.45 in dense cases. We argue that, while these methods cannot replace traditional Bayesian network structure-learning techniques, they are useful as computationally cheap data exploration tools and in knowledge discovery over structures which cannot be modelled as Bayesian networks. |

| 2020 International SAUPEC/RobMech/PRASA Conference |

| Paper · Poster |
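The two constructions described in the abstract above (thresholding pairwise similarities, and taking a maximum weighted spanning tree over the complete set of relations) can be sketched as follows. This is a minimal illustration, not the paper's implementation: the function names are hypothetical, the similarity matrix is assumed to be precomputed and symmetric, and Kruskal's algorithm is an assumed choice for building the spanning tree.

```python
def threshold_skeleton(sim, threshold):
    """Keep every undirected edge (i, j), i < j, with similarity >= threshold.

    sim: symmetric n x n nested list of pairwise similarity scores (assumed given).
    """
    n = len(sim)
    return {
        (i, j)
        for i in range(n)
        for j in range(i + 1, n)
        if sim[i][j] >= threshold
    }


def max_spanning_tree(sim):
    """Maximum weighted spanning tree via Kruskal's algorithm with union-find."""
    n = len(sim)
    parent = list(range(n))

    def find(x):
        # Follow parent links to the component root, halving paths as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    # Consider the strongest relations first.
    edges = sorted(
        ((sim[i][j], i, j) for i in range(n) for j in range(i + 1, n)),
        reverse=True,
    )
    tree = set()
    for _, i, j in edges:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:  # adding this edge does not create a cycle
            parent[root_i] = root_j
            tree.add((i, j))
    return tree


# Toy similarity matrix over three variables (made-up values).
sim = [
    [0.0, 0.9, 0.2],
    [0.9, 0.0, 0.6],
    [0.2, 0.6, 0.0],
]
skeleton = threshold_skeleton(sim, threshold=0.5)
tree = max_spanning_tree(sim)
```

The threshold variant can return disconnected or dense graphs depending on the cutoff, while the spanning tree always returns exactly n - 1 edges, which is the trade-off between the two approaches in this sketch.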